Imagine a personal assistant who can not only answer your questions but also go out and find the most up-to-date information, make a plan, and then carry out a series of steps to get something done. That’s the difference Retrieval-Augmented Generation (RAG) is making for AI agents. It’s like giving them a key to the world’s biggest library, so they can look things up and reason over what they find instead of relying only on what they “remember.” Learn more about Agentic RAG in this blog post.

How RAG Is Changing AI Agents

Before RAG, an AI agent’s actions were often dictated by a pre-programmed script or the limits of its internal memory. Such agents were reactive: a user would ask a question, and the agent would respond with the best information it had available, which often led to “hallucinations” or outdated answers.

RAG fundamentally changes this dynamic. It gives the agent a superpower: the ability to proactively seek out and verify information from the world outside its core model. This shift from a closed-book system to an open-book system is the key to unlocking true autonomy.

An autonomous agent, powered by RAG, doesn’t just answer a question; it embarks on a mission to solve a problem. Here’s a look at the core ways RAG is enabling this transformation:

1. Dynamic Planning and Tool Use

A truly autonomous agent needs to be able to plan a multi-step course of action. RAG provides the crucial missing piece in this planning process. For example, if an agent is tasked with “planning a trip to a city and finding the best restaurants,” it won’t simply make up a list.

It will use RAG to first search for “current top restaurants in [city name],” then retrieve and analyze recent reviews from reliable sources, and finally, synthesize this information to provide a detailed, up-to-date recommendation. RAG becomes a tool the agent can use at any point in its reasoning chain to validate or augment its plan.
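To make the restaurant example concrete, here is a minimal sketch of retrieval exposed as one tool among several that the agent’s plan can call. Everything in it is an assumption for illustration: the `REVIEWS` list stands in for an external review source, and `retrieve_reviews`, `rank_restaurants`, `TOOLS`, and `run_plan` are hypothetical names, not part of any real framework.

```python
from dataclasses import dataclass


@dataclass
class Review:
    restaurant: str
    city: str
    rating: float
    year: int


# Hypothetical stand-in for an external, regularly updated review source.
REVIEWS = [
    Review("Trattoria Uno", "Rome", 5.0, 2024),
    Review("Trattoria Uno", "Rome", 4.5, 2024),
    Review("Old Cantina", "Rome", 5.0, 2015),  # stale review, filtered out below
    Review("Pasta Bar", "Rome", 4.0, 2024),
    Review("Le Bistro", "Paris", 4.5, 2024),
]


def retrieve_reviews(city: str, min_year: int) -> list[Review]:
    """Retrieval tool: fetch only recent reviews for the requested city."""
    return [r for r in REVIEWS if r.city == city and r.year >= min_year]


def rank_restaurants(reviews: list[Review]) -> list[tuple[str, float]]:
    """Analysis tool: average the ratings per restaurant, best first."""
    ratings: dict[str, list[float]] = {}
    for review in reviews:
        ratings.setdefault(review.restaurant, []).append(review.rating)
    averages = [(name, round(sum(v) / len(v), 2)) for name, v in ratings.items()]
    return sorted(averages, key=lambda pair: pair[1], reverse=True)


# The agent sees retrieval as just another callable in its tool registry.
TOOLS = {"retrieve_reviews": retrieve_reviews, "rank_restaurants": rank_restaurants}


def run_plan(city: str, min_year: int = 2023) -> list[tuple[str, float]]:
    """A fixed two-step plan: retrieve fresh evidence, then rank it."""
    reviews = TOOLS["retrieve_reviews"](city, min_year)
    return TOOLS["rank_restaurants"](reviews)


recommendations = run_plan("Rome")
```

In a real agent the language model would choose which tool to call and with what arguments; the fixed plan here only shows where retrieval slots into the chain.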

2. Self-Correction and Factual Grounding

One of the biggest challenges with autonomous agents is their potential for errors. RAG acts as an essential self-correction mechanism. If an agent’s initial generated response is based on an assumption, it can be programmed to perform a retrieval step to “ground” its answer in an external knowledge base. If the retrieved information contradicts the agent’s initial thought, the agent can course-correct and generate a new, more accurate response. This iterative process of thinking, retrieving, and re-evaluating is the hallmark of a system that can reason for itself.
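The think, retrieve, re-evaluate loop can be sketched in a few lines. This is a toy under stated assumptions: `MODEL_MEMORY` stands in for what the model recalls from training, `KNOWLEDGE_BASE` stands in for an external store, and the keys, values, and file name are invented for the example.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical stand-ins: what the model "remembers" vs. an external knowledge base.
MODEL_MEMORY = {"latest_release": "2.1"}
KNOWLEDGE_BASE = {"latest_release": ("3.0", "release-notes.md")}


@dataclass
class Answer:
    value: str
    source: str
    corrected: bool


def draft_answer(key: str) -> str:
    """Initial response based only on the model's internal memory."""
    return MODEL_MEMORY.get(key, "unknown")


def retrieve(key: str) -> Optional[tuple[str, str]]:
    """Retrieval step: return (value, source) from the knowledge base, if any."""
    return KNOWLEDGE_BASE.get(key)


def grounded_answer(key: str) -> Answer:
    """Draft, retrieve, and course-correct when the evidence disagrees."""
    draft = draft_answer(key)
    evidence = retrieve(key)
    if evidence is None:
        # Nothing to check against, so flag the draft as unverified.
        return Answer(draft, "model memory (unverified)", corrected=False)
    value, source = evidence
    if value != draft:
        # Retrieved evidence contradicts the draft: prefer the evidence.
        return Answer(value, source, corrected=True)
    return Answer(draft, source, corrected=False)
```

Calling `grounded_answer("latest_release")` replaces the stale draft with the retrieved value and records where it came from. A production system would compare free-text answers with a model or a scoring function rather than with `!=`.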

3. Handling Long-Tail and Niche Information

Traditional AI agents struggle with specific, obscure, or domain-specific questions. RAG gives them the ability to be experts on demand. For a specialized task, such as analyzing a company’s internal legal documents, an agent can use RAG to search a vector database containing those specific documents. This allows it to generate a response that is not only accurate but also deeply informed by the unique data of its environment, a feat impossible with a general-purpose model alone.
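As a rough illustration of what searching a vector database means, the sketch below turns each document into a vector and ranks documents by cosine similarity to the query. It is deliberately simplified: the word-count `embed` function is an assumption standing in for a learned embedding model, the in-memory `INDEX` stands in for a real vector database, and the document names and contents are made up.

```python
import math
from collections import Counter

# Hypothetical internal documents; a real system would load and chunk actual files.
DOCUMENTS = {
    "nda.txt": "confidential information must not be disclosed to third parties",
    "lease.txt": "the tenant shall pay rent on the first day of each month",
    "employment.txt": "the employee is entitled to twenty days of paid leave",
}


def embed(text: str) -> Counter:
    """Toy embedding: a sparse word-count vector (assumption, not a learned model)."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse vectors."""
    dot = sum(a[term] * b[term] for term in a)  # Counter yields 0 for missing terms
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


# Build the index once, up front, as a vector database would.
INDEX = {name: embed(text) for name, text in DOCUMENTS.items()}


def search(query: str, k: int = 1) -> list[str]:
    """Return the names of the k documents most similar to the query."""
    query_vector = embed(query)
    ranked = sorted(INDEX, key=lambda name: cosine(query_vector, INDEX[name]), reverse=True)
    return ranked[:k]


top_match = search("when must the tenant pay rent")
```

The agent would then pass the text of the top matches to the model as context, which is how it answers from documents the model never saw during training.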

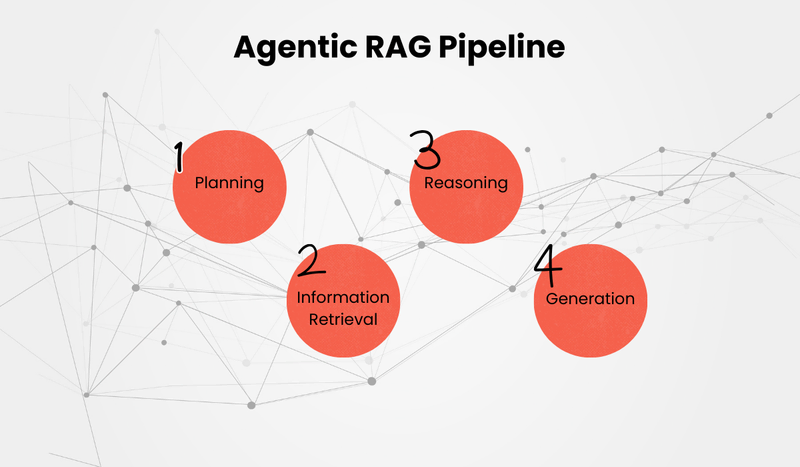

The Agentic RAG Pipeline: A New Architecture for Autonomy

The most advanced applications of RAG in autonomous agents don’t just use it for a single lookup. They embed it into a complex, multi-step pipeline that mirrors human reasoning.

- Planning: The agent receives a user prompt and analyzes it to create a high-level plan (e.g., “Step 1: Research, Step 2: Analyze, Step 3: Synthesize”).

- Information Retrieval (RAG): The agent identifies a need for external information and uses its RAG tool to query a knowledge base. It can perform multiple queries to gather all necessary context.

- Reasoning and Tool Use: The agent takes the retrieved information and uses it to execute a specific task, such as using a code interpreter to analyze data or a web search tool to find live information.

- Generation: The agent synthesizes all the gathered information and presents a final, well-grounded, and comprehensive response or takes a final action.
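The four stages above can be sketched as a small pipeline that passes shared state from one step to the next. This is only an illustration under assumptions: the `KNOWLEDGE_BASE` entries, the `AgentState` class, and the function names are hypothetical, the plan is hard-coded where a real agent would generate it with a language model, and the `keyword` argument stands in for a query the agent would derive from the question itself.

```python
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical knowledge base of (source, text) pairs.
KNOWLEDGE_BASE = [
    ("q1-report.txt", "revenue 100"),
    ("q2-report.txt", "revenue 140"),
    ("hr-policy.txt", "vacation 20"),
]


@dataclass
class AgentState:
    question: str
    plan: list[str] = field(default_factory=list)
    context: list[tuple[str, str]] = field(default_factory=list)
    result: Optional[float] = None


def make_plan(state: AgentState) -> None:
    """Planning: a fixed plan here; a real agent would derive it from the prompt."""
    state.plan = ["retrieve", "analyze", "generate"]


def retrieve(state: AgentState, keyword: str) -> None:
    """Information retrieval: keep only documents that mention the keyword."""
    state.context = [(src, text) for src, text in KNOWLEDGE_BASE if keyword in text]


def analyze(state: AgentState) -> None:
    """Reasoning and tool use: a tiny stand-in for a code-interpreter step."""
    values = [float(text.split()[-1]) for _, text in state.context]
    state.result = sum(values) / len(values) if values else None


def generate(state: AgentState) -> str:
    """Generation: a final answer that cites the sources it relied on."""
    if state.result is None:
        return "No supporting documents found."
    sources = ", ".join(src for src, _ in state.context)
    return f"Average revenue: {state.result:.1f} (sources: {sources})"


def run_agent(question: str, keyword: str) -> str:
    """Execute the plan step by step and return the final answer."""
    state = AgentState(question=question)
    make_plan(state)
    answer = ""
    for step in state.plan:
        if step == "retrieve":
            retrieve(state, keyword)
        elif step == "analyze":
            analyze(state)
        elif step == "generate":
            answer = generate(state)
    return answer


final_answer = run_agent("What was the average quarterly revenue?", "revenue")
```

Keeping the state in one object makes it easy to add steps, such as a second retrieval pass when the first one returns nothing useful.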

This “Agentic RAG” architecture is driving the next generation of AI applications, from hyper-personalized customer support bots that can access and understand a user’s entire history to scientific research agents that can summarize and cross-reference new academic papers in real time.

The combination of RAG’s ability to provide timely, factual information and an agent’s ability to plan and act is a game-changer. It’s the difference between a tool that tells you what it remembers and a partner that can learn and solve problems in a dynamic world. The future of autonomous AI is here, and it’s powered by RAG.

The Big Picture: Why This Matters for All of Us

The move towards Agentic RAG isn’t just a technical upgrade; it’s a huge step forward for AI as a whole, enabling us to develop AI agents that are:

- More Trustworthy: Because they can show you where they got their information (by providing a link or a document source), you can trust their answers more.

- More Capable: They can tackle more difficult jobs, from a financial agent analyzing live stock market data to a legal assistant summarizing new court filings.

- More Efficient: Companies can automate complex, knowledge-based tasks that used to require a lot of human effort.

In simple terms, RAG gives AI agents the ability to do what we do every day: find new information, make sense of it, and use it to solve problems. This shift is moving us closer to a future where AI is not just a tool but a true partner in getting things done.

Frequently Asked Questions (FAQ)

Q: What’s the main difference between a regular AI agent and an agentic RAG system?

A: A regular AI agent is limited to the knowledge it was trained on, which can quickly become outdated. An agentic RAG system can “go and look up” new and updated information from external sources, making it far more accurate and relevant.

Q: Does RAG get rid of “hallucinations”?

A: It greatly reduces them, but it does not eliminate them. Because a RAG agent bases its answers on specific, verifiable documents or data, it is much less likely to make up facts or generate misleading information, though it can still misread or ignore what it retrieves. It gives the AI a source of truth to stick to.

Q: Is RAG an alternative to building an AI model?

A: No, it’s a partner. RAG doesn’t replace the core AI model’s ability to reason and understand language. Instead, it makes the model’s job easier and its outputs better by providing it with the most accurate and current information possible, without the need for expensive and time-consuming retraining.