Enterprises today are navigating an increasingly complex AI landscape. From Large Language Models (LLMs) that can generate text, summarize documents, and automate customer support, to generative AI systems capable of producing realistic images, video, and audio, the choices are expanding rapidly. Understanding generative AI vs LLM is essential for businesses that want to adopt AI strategically and extract maximum value from these technologies.

Although LLMs fall under the broader category of generative AI, they differ significantly in purpose, architecture, and application. LLMs specialize in language tasks, excelling at understanding context, reasoning, and generating coherent text. Generative AI, in contrast, covers multiple modalities, enabling enterprises to create content across visual, audio, and multimodal domains. Recognizing these distinctions helps decision-makers select the right AI solution for their unique needs.

In this post, you will gain a clear understanding of the difference between generative AI and LLMs, where they overlap, and how to make informed decisions about which model to use for your specific enterprise needs.

Core Definitions and Concepts

Generative AI

Generative AI refers to any artificial intelligence system capable of producing new content by learning patterns and structures from existing datasets. Unlike predictive AI, which classifies or forecasts, generative AI creates something entirely new that resembles the original data.

How it works

Generative AI models learn the statistical relationships and structure within a dataset. For instance:

- In text, the model learns which words or phrases frequently appear together.

- In images, it learns pixel patterns, shapes, textures, and object relationships.

- In audio, it learns patterns in pitch, rhythm, or voice timbre.

When generating content, the model uses this learned structure to produce outputs that are coherent and realistic relative to the training data.
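As a rough illustration of the text case, the sketch below counts which word follows which in a tiny corpus and then samples new text from those counts. It is a toy bigram model, not how production systems are built; the corpus and the function names `train_bigrams` and `generate` are made up for this example.

```python
import random
from collections import Counter, defaultdict

def train_bigrams(text):
    """Count how often each word is followed by each other word."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts

def generate(counts, start, length=8, seed=0):
    """Sample a word sequence by repeatedly drawing an observed next word."""
    rng = random.Random(seed)
    output = [start]
    for _ in range(length - 1):
        followers = counts.get(output[-1])
        if not followers:  # no observed continuation: stop early
            break
        candidates = list(followers.keys())
        weights = list(followers.values())
        output.append(rng.choices(candidates, weights=weights, k=1)[0])
    return " ".join(output)

# Hypothetical toy corpus; real models learn from far larger datasets.
corpus = "the customer asked a question and the agent answered the question"
model = train_bigrams(corpus)
print(generate(model, "the"))
```

Every generated word pair was seen in the corpus, which is why the output resembles the training data. Larger models learn much richer structure, but the learn-then-sample pattern is the same.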

Key architectures

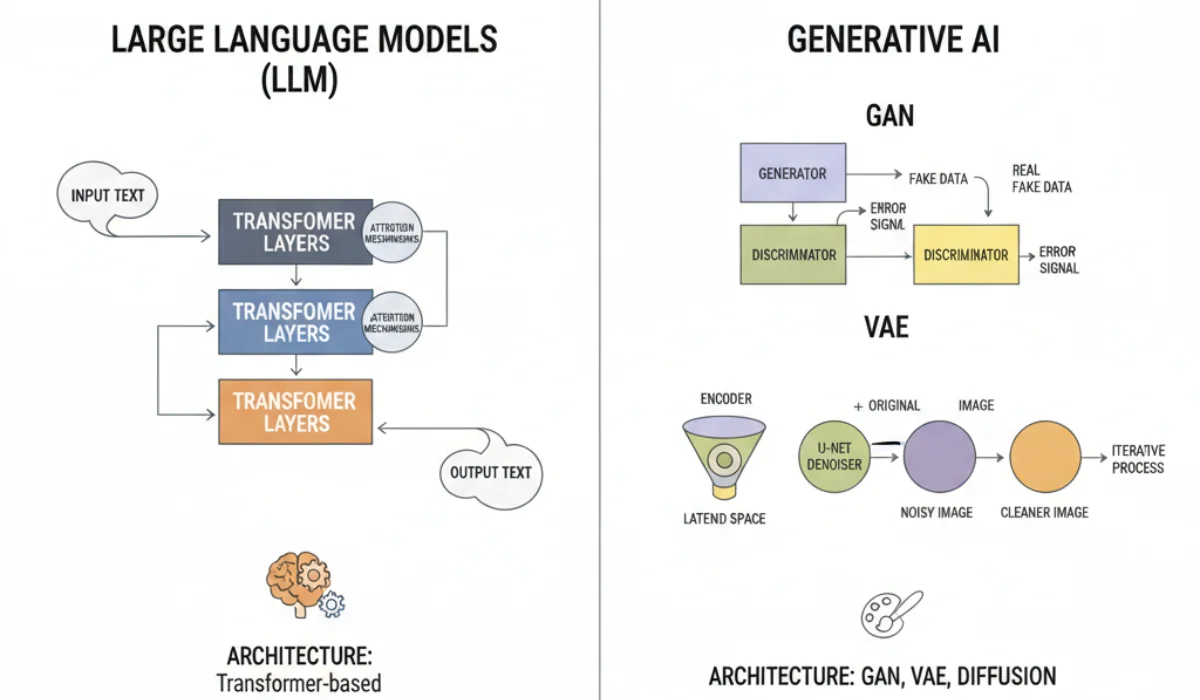

1. Generative Adversarial Networks (GANs):

- Consist of two networks: a generator that creates content and a discriminator that evaluates it.

- They compete in a zero-sum game: the generator improves to fool the discriminator, and the discriminator improves to catch fake content.

Applications: High-quality image synthesis, video deepfakes, product visualisation prototypes.

2. Variational Autoencoders (VAEs):

- Encode input data into a compressed latent space, then decode it to reconstruct content.

- By sampling from the latent space, VAEs generate variations of the input data.

Applications: Synthetic datasets for simulations, design variations, anomaly detection.

3. Diffusion Models:

- Start from random noise and iteratively refine it to produce structured content.

- Popular in image generation tools like DALL·E, Stable Diffusion, and Midjourney.

Applications: Marketing images, concept art, visual prototyping.

4. Transformer-based models:

- Originally designed for language, now extended to multimodal generation (text, image, audio).

- Use self-attention mechanisms to capture relationships between elements in the input data.

Applications: Multimodal content assistants, AI-powered creative tools.
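To make the self-attention idea used by transformer-based models more concrete, here is a minimal sketch in plain Python. It is simplified on purpose: real transformers first pass the inputs through learned query, key, and value projections, which are omitted here, and the three input vectors are made-up values.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))

def self_attention(vectors):
    """Scaled dot-product self-attention where queries, keys, and values
    are all the raw input vectors (no learned projections)."""
    scale = math.sqrt(len(vectors[0]))
    outputs = []
    for query in vectors:
        # How strongly this element relates to every element in the input.
        weights = softmax([dot(query, key) / scale for key in vectors])
        # New representation: a weighted mix of all input vectors.
        mixed = [sum(w * v[i] for w, v in zip(weights, vectors))
                 for i in range(len(query))]
        outputs.append(mixed)
    return outputs

# Three hypothetical 2-dimensional token vectors.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
for row in self_attention(tokens):
    print([round(x, 3) for x in row])
```

Each output row blends information from every input element, weighted by similarity, which is how the mechanism captures relationships across the whole input.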

Practical enterprise examples:

- Marketing teams generating social media campaigns with multiple visual variations.

- Product design teams creating hundreds of concept renderings from specifications.

- Simulation teams generating synthetic data for training and testing autonomous systems.

Large Language Models (LLMs)

LLMs are specialized generative AI models trained to understand and generate human language. While generative AI can create any type of content, LLMs focus exclusively on text-based tasks, including structured text like code.

How they work

LLMs are typically based on the transformer architecture, which uses self-attention mechanisms to understand the relationships between words in a sentence or across paragraphs. Key characteristics:

- Self-supervised learning: Models predict the next word (or token) in a sequence without requiring labeled data.

- Fine-tuning: After pretraining, models are refined on curated examples and human feedback to improve alignment with business requirements, safety, and accuracy.

- Context awareness: LLMs maintain context over long sequences, allowing them to summarise documents, answer questions, or hold coherent conversations.
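The self-supervised objective described above can be sketched in a few lines: the raw text supplies its own labels, because each token is the target for the tokens before it. This is an illustration only. Splitting on whitespace stands in for the subword tokenizers real LLMs use, and `next_token_pairs` and `context_size` are hypothetical names.

```python
def next_token_pairs(text, context_size=3):
    """Build (context, target) training examples from unlabeled text."""
    tokens = text.split()  # assumption: whitespace tokens, not subwords
    pairs = []
    for i in range(1, len(tokens)):
        context = tokens[max(0, i - context_size):i]
        pairs.append((context, tokens[i]))
    return pairs

pairs = next_token_pairs("the invoice is due on friday")
for context, target in pairs:
    print(context, "->", target)
```

No human annotation is needed to produce these pairs, which is what allows pretraining on very large text corpora.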

Scale and capabilities

- Parameters: Modern LLMs range from hundreds of millions to trillions of parameters. Larger models tend to capture more nuanced patterns and generate higher-quality outputs.

- Training data: Text from books, articles, code repositories, conversation logs, and proprietary datasets.
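Parameter counts translate directly into memory requirements. The back-of-the-envelope sketch below estimates the memory needed just to hold a model's weights, assuming 2 bytes per parameter for 16-bit precision and 4 bytes for 32-bit. The model sizes are illustrative, and the estimate leaves out activations, caches, and optimizer state.

```python
def weight_memory_gb(num_parameters, bytes_per_parameter=2):
    """Approximate memory for model weights alone, in gigabytes (10^9 bytes)."""
    return num_parameters * bytes_per_parameter / 1e9

# Hypothetical model sizes spanning the range mentioned above.
for label, params in [("500M", 5e8), ("7B", 7e9), ("70B", 7e10), ("1T", 1e12)]:
    print(f"{label}: {weight_memory_gb(params):,.0f} GB at 16-bit, "
          f"{weight_memory_gb(params, 4):,.0f} GB at 32-bit")
```

Even this rough figure is useful early in planning, since it indicates whether a model fits on a single accelerator or must be split across several.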

Applications of LLMs:

- Customer support chatbots capable of answering questions based on company knowledge bases.

- Automated content generation for marketing, reports, or internal documentation.

- Code assistants converting human instructions into functional programming code.

- Summarisation tools condensing lengthy reports into actionable insights.

Architecture and Training Differences Between Generative AI and LLM

When comparing generative AI vs LLM, it’s helpful to start with how these models are structured and trained, because their design determines what they can do and how they perform in real-world applications.

Generative AI is a broad category that includes models for creating images, video, audio, or text. For instance, GANs (Generative Adversarial Networks) pit a generator against a discriminator: one creates outputs while the other evaluates them, and the competition improves quality over time.

Variational Autoencoders (VAEs) compress input data into a compact representation and then reconstruct it, allowing the creation of multiple variations of the original content. Recently, diffusion models have gained popularity for producing high-resolution images, starting from random noise and gradually refining it into detailed visuals.

LLMs, on the other hand, are designed specifically for text. They rely on transformer architectures with self-attention mechanisms that track relationships between words across long sequences. Decoder-only models like GPT focus on generating coherent text, while encoder-decoder models such as T5 or BART handle tasks like summarization or translation. The scale of LLMs, ranging from hundreds of millions to trillions of parameters, enables them to understand context, nuances, and intent in human language.

The training processes highlight another key difference. Generative AI models are trained on datasets that match their content type and often need significant computing power for fine-tuning. LLMs start with pretraining on massive text corpora, then undergo fine-tuning for domain-specific applications. Techniques like reinforcement learning from human feedback (RLHF) help align outputs with real-world expectations and reduce errors.

Practical takeaway for businesses:

- Generative AI is ideal when you need to create images, videos, or other rich media content.

- LLMs are best for tasks that involve understanding, generating, or analyzing text.

- The choice affects infrastructure, compute needs, and deployment strategy.

Understanding these differences between generative AI and LLM ensures your organization chooses the right model for its specific goals, avoiding wasted resources and improving results.

Use Cases and Enterprise Decision Framework

Enterprises often struggle to decide whether to deploy a Large Language Model (LLM), a broader generative AI model, or a combination of both. The choice should be guided by the type of content to generate, the business objective, and the technical requirements.

Use Cases for LLMs

LLMs excel in tasks involving language understanding and generation. Their ability to process large volumes of text and maintain context over long sequences makes them ideal for applications such as:

- Customer support automation: LLMs can interpret queries from multiple channels and generate relevant responses, reducing support costs and response times.

- Content summarisation: Enterprises can use LLMs to condense reports, emails, or legal documents into concise, actionable summaries.

- Code generation and documentation: LLMs can translate natural language instructions into code snippets, create boilerplate code, or generate documentation, boosting developer productivity.

- Internal knowledge management: LLMs can answer questions using company-specific data, allowing employees to access insights without manual search.

Enterprises seeking to automate customer support or generate business content can leverage LLM development services to build models tailored to their domain.

Where to Use Generative AI

Generative AI extends beyond text to images, video, audio, and multimodal content. Applications include:

- Marketing and creative content generation: Companies can invest in Generative AI development to create unique marketing visuals or interactive experiences.

- Simulation and design: Produce multiple design variations, virtual prototypes, or synthetic datasets for testing purposes.

- Audio and voice synthesis: Generate realistic voiceovers, music, or sound effects for media production or training simulations.

- Multimodal assistants: Combine text, images, and audio generation for richer customer experiences, such as virtual tutors, interactive presentations, or immersive product demos.

How to Choose

Choosing the right AI model requires balancing business objectives, technical requirements, and risk management. A practical framework involves three steps:

- Define the task clearly: Identify the content type and expected output. Ask whether the problem is language-centric, multimodal, or both.

- Assess data availability and quality: LLMs require extensive text data, while generative AI may require image, audio, or multimodal datasets. The availability of clean, labelled, or proprietary data will influence whether you can fine-tune a model effectively.

- Evaluate operational feasibility: Consider infrastructure, latency requirements, integration complexity, and cost. For example, a high-resolution image generation pipeline may demand more GPUs and storage than an LLM-based summarisation service.

By following this framework, enterprises can map their use case to the right AI model, reducing the risk of overspending and misaligned deployments. Importantly, some applications may require a hybrid approach. For instance, a customer-facing virtual assistant might combine an LLM for text understanding with a generative AI model to produce product images or diagrams on demand.
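The first step of the framework, deciding whether a task is language-centric, multimodal, or both, can be expressed as a small rule. The sketch below is a simplified illustration rather than a complete decision tool. The function name `recommend_model` and its return labels are hypothetical, and it deliberately ignores the data and feasibility steps.

```python
TEXT = "text"

def recommend_model(output_modalities):
    """Map the content types a use case must produce to a model family."""
    modalities = {m.lower() for m in output_modalities}
    if not modalities:
        raise ValueError("specify at least one output modality")
    if modalities == {TEXT}:
        return "LLM"
    if TEXT in modalities:
        return "hybrid: LLM plus generative AI model"
    return "generative AI model"

print(recommend_model(["text"]))            # language-centric task
print(recommend_model(["image", "audio"]))  # rich media only
print(recommend_model(["text", "image"]))   # e.g. assistant that also draws diagrams
```

In practice the result is only a starting point. Data availability and operational cost can still rule out an otherwise suitable model family.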

Risks, Governance, and Implementation Checklist

Deploying generative AI or LLMs at scale is not without risk. Enterprises must address operational, ethical, and regulatory challenges to ensure AI adoption delivers value safely and reliably. Understanding these risks and establishing governance processes is critical before production deployment.

Key Risks

Output quality and hallucinations: LLMs can generate text that is plausible but factually incorrect, while generative AI models producing images or video can create misleading or unrealistic visuals. Inaccurate outputs can lead to misinformed decisions, brand damage, or compliance breaches.

Bias and fairness: Both LLMs and generative AI inherit biases present in their training data. For LLMs, this may appear as biased recommendations or language; for generative AI, it may manifest in visual stereotypes or exclusionary representations. Enterprises must actively detect and mitigate these biases.

Data privacy and security: Training or fine-tuning models on proprietary data requires careful management. Sensitive information may unintentionally appear in generated outputs if governance controls are not in place. This is especially important in sectors such as healthcare, finance, and legal services.

Operational and infrastructure challenges: High compute requirements for large models can strain budgets and infrastructure. Real-time applications, such as chatbots or recommendation engines, require optimised pipelines to minimise latency without compromising quality.
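For the data privacy risk in particular, one common control is to redact obvious identifiers before text is logged or added to a fine-tuning dataset. The sketch below masks email addresses with a regular expression. It is a minimal illustration under that single assumption, since real deployments need to cover many more identifier types. The `[EMAIL]` placeholder and the function name `redact_emails` are made up for this example.

```python
import re

# Simple pattern for typical email addresses; not a full RFC 5322 validator.
EMAIL_PATTERN = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")

def redact_emails(text):
    """Replace anything that looks like an email address with a placeholder."""
    return EMAIL_PATTERN.sub("[EMAIL]", text)

print(redact_emails("Contact jane.doe@example.com for the contract."))
```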

Governance Considerations

Effective governance ensures AI outputs are accurate, ethical, and compliant. Key practices include:

- Establishing clear model ownership and accountability within teams.

- Implementing human-in-the-loop review for high-risk outputs.

- Conducting regular bias audits and updating training data to address systemic issues.

- Applying data governance policies to control access, protect sensitive information, and comply with regulations such as GDPR or HIPAA.

- Tracking and logging AI decisions to provide auditable transparency for stakeholders.
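Two of these practices, human-in-the-loop review and auditable logging, can be combined in one small routine. The sketch below is a hypothetical illustration: the set of high-risk topics, the status labels, and the function name `review_and_log` are assumptions, and a production system would write to durable storage rather than an in-memory list.

```python
import json
from datetime import datetime, timezone

HIGH_RISK_TOPICS = {"legal", "medical", "financial"}  # hypothetical policy

def review_and_log(prompt, output, topic, audit_log):
    """Record every model output and flag high-risk ones for human review."""
    needs_review = topic in HIGH_RISK_TOPICS
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "topic": topic,
        "prompt": prompt,
        "output": output,
        "status": "pending_human_review" if needs_review else "auto_approved",
    }
    audit_log.append(json.dumps(record))  # one JSON line per decision
    return record["status"]

log = []
print(review_and_log("Summarise clause 4", "Clause 4 limits liability.", "legal", log))
print(review_and_log("Draft a welcome email", "Welcome aboard!", "marketing", log))
```

Because each entry is a self-contained JSON line, the log can later be filtered by topic or status when auditors or stakeholders ask how a particular output was handled.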

What to Ensure Before Applying Generative AI or LLM

A structured checklist can help enterprises integrate generative AI and LLMs efficiently and safely:

- Define objectives clearly: Specify business goals, expected outputs, and performance metrics.

- Select the right model: Choose between LLMs, generative AI, or a hybrid approach based on task requirements and modality.

- Assess data readiness: Ensure access to high-quality training or fine-tuning datasets. Clean, structured, and compliant data improves output reliability.

- Plan infrastructure and compute: Estimate GPU, storage, and cloud requirements for both training and inference.

- Establish monitoring protocols: Track performance, detect anomalies, and monitor bias and accuracy continuously.

- Deploy human oversight: Define points in the workflow where human review is essential, particularly for sensitive or customer-facing outputs.

- Maintain compliance: Ensure that the model and its outputs adhere to legal, ethical, and corporate standards.

Following this checklist helps organisations mitigate risks while maximising the benefits of AI deployment, whether for text, visual, audio, or multimodal applications.

Summary and Key Takeaways

Understanding generative AI vs LLM is crucial for any business looking to use AI effectively. Generative AI covers multiple content types (images, video, audio, and text) while LLMs focus specifically on understanding and generating language. Each comes with its own architecture, training methods, and ideal use cases, whether it’s automating customer support and generating content with LLMs, or creating visuals and simulations with generative AI.

Choosing the right approach depends on the type of content you need, your business goals, and operational considerations. Many organizations find value in a hybrid strategy, combining LLMs and generative AI to create more versatile, impactful solutions. By working with LLM development services, businesses can deploy AI responsibly and reliably, unlocking tangible benefits and measurable outcomes.

FAQs

Is generative AI the same as LLM?

No, generative AI and LLMs are not the same, though they are related. Generative AI is a broad category of AI models designed to create new content such as text, images, audio, or video. Large Language Models (LLMs) are a subset of generative AI focused specifically on understanding and generating human language. LLMs excel at tasks like text summarization, content generation, translation, and conversational AI, while generative AI can handle multiple modalities beyond text.

What is the difference between LLM and GPT?

LLM (Large Language Model) is a general term for any AI model trained on massive text datasets to understand and generate language. GPT (Generative Pre-trained Transformer) is a specific family of LLMs developed by OpenAI. GPT models are transformer-based, pretrained on diverse text corpora, and fine-tuned for text generation tasks. In short, all GPTs are LLMs, but not all LLMs are GPTs; other examples include BERT, T5, and LLaMA.

Is LLM better than AI?

This is not a direct comparison because LLMs are a type of AI. Asking whether an LLM is “better than AI” is like asking whether a smartphone is better than electronics: it depends on the task. LLMs excel at text and language-related tasks, but for image generation, audio synthesis, or other AI applications, other generative AI models or AI systems may be more suitable. Effectiveness depends on the use case and model design.

What are the 4 types of AI?

AI is commonly classified into four types based on capabilities and functions:

- Reactive Machines: Basic AI that reacts to inputs without memory, e.g., IBM’s Deep Blue.

- Limited Memory: AI that uses historical data to make decisions, e.g., self-driving cars.

- Theory of Mind: AI capable of understanding human emotions and intentions; mostly experimental today.

- Self-Aware AI: Hypothetical AI that possesses consciousness and self-awareness; not yet realized.

These types describe the evolution of AI capabilities, from simple task execution to potentially autonomous, self-aware systems.