Generative AI model development is now a critical skill for data scientists, ML engineers, and AI researchers looking to create systems that produce original content such as text, images, code, or audio. Building your own generative AI model allows you to customize outputs, optimize performance, and gain full control over data and inference pipelines. If your team lacks the required expertise, you might consider hiring generative AI developers to accelerate development and ensure production-ready solutions.

This guide is for those who want to move beyond pre-built AI tools and create their own generative systems. It walks through the key stages of taking a generative AI project from concept to production deployment: model selection, technical setup, data pipelines, training, evaluation, and real-world deployment.

Understanding Generative AI

Generative AI focuses on creating new content rather than analyzing existing data. These systems learn statistical patterns from large datasets and use them to produce original outputs that resemble real examples. Core capabilities include:

- Text: Human-like text for chatbots, content creation, and code generation.

- Images: Photorealistic images, style transfer, or artistic outputs.

- Audio: Music, speech synthesis, or sound effects.

Modern systems often support multimodal generation, which involves processing multiple data types simultaneously, such as image captioning or text-to-image synthesis.

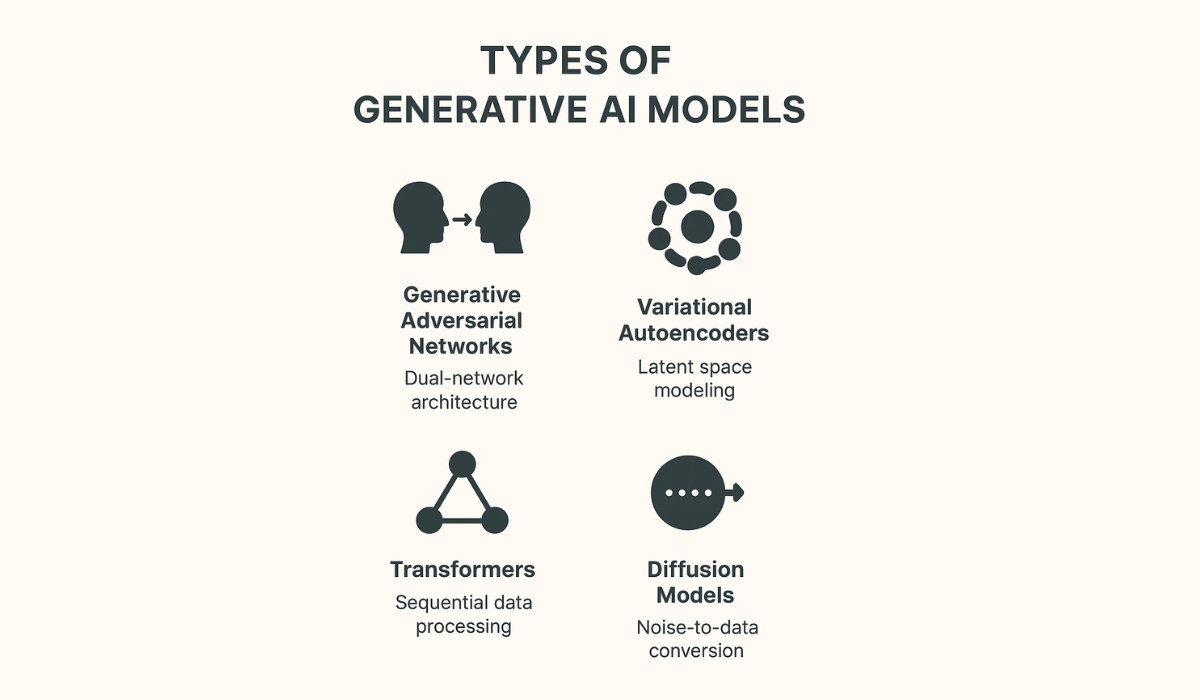

Compare Different Generative Model Architectures

The landscape of generative model architectures offers several distinct approaches, each with unique strengths and applications. Understanding these differences is essential for the successful development of generative AI models.

- Generative Adversarial Networks (GANs) employ a dual-network architecture where a generator creates fake data while a discriminator attempts to distinguish real from generated content. This adversarial training process produces high-quality outputs, particularly for image generation. GANs excel at creating photorealistic images but can suffer from training instability and mode collapse, where the generator produces only a narrow range of outputs.

- Variational Autoencoders (VAEs) combine encoding and decoding networks with probabilistic modeling. These architectures compress input data into a latent space representation before reconstructing it. VAEs provide stable training and enable controlled generation through latent space manipulation, though outputs may appear somewhat blurred compared to GANs.

- Transformer-based models have revolutionized text generation and increasingly dominate other domains. These attention-based architectures process sequential data effectively and scale well with increased model size and training data. Large language models like GPT demonstrate the power of transformer architectures for text generation, while recent adaptations show promise for image and multimodal generation.

- Diffusion models are among the most recent breakthroughs in generative modeling. These systems learn to reverse a noise-adding process, gradually transforming random noise into structured content. Diffusion models produce exceptionally high-quality outputs and train more stably than GANs, making them the leading approach for many image generation applications.
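To make the "noise-adding process" concrete, here is a minimal sketch of the forward process used in DDPM-style diffusion models: a clean sample x₀ is mixed with Gaussian noise ε according to a variance schedule, x_t = √(ᾱ_t)·x₀ + √(1 − ᾱ_t)·ε. The linear β schedule (1e-4 to 0.02 over 1,000 steps) is a common default rather than a requirement, and the function names are hypothetical. A real diffusion model trains a network to predict ε from (x_t, t) so it can run this process in reverse.

```python
import math
import random


def linear_beta_schedule(num_steps: int = 1000, beta_start: float = 1e-4,
                         beta_end: float = 0.02) -> list[float]:
    """Noise variance added at each step, increasing linearly (a DDPM-style default)."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def cumulative_alphas(betas: list[float]) -> list[float]:
    """alpha_bar_t = prod over s <= t of (1 - beta_s): the share of clean signal left at step t."""
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def q_sample(x0: list[float], t: int, alpha_bars: list[float],
             rng: random.Random) -> tuple[list[float], list[float]]:
    """Jump directly to step t: x_t = sqrt(a_bar) * x0 + sqrt(1 - a_bar) * eps."""
    a_bar = alpha_bars[t]
    eps = [rng.gauss(0.0, 1.0) for _ in x0]
    xt = [math.sqrt(a_bar) * x + math.sqrt(1.0 - a_bar) * e for x, e in zip(x0, eps)]
    return xt, eps  # the denoising network would be trained to predict eps from (xt, t)


rng = random.Random(0)
alpha_bars = cumulative_alphas(linear_beta_schedule())
x0 = [1.0, -0.5, 0.25]  # toy "clean" data point
for t in (0, 250, 999):
    xt, _ = q_sample(x0, t, alpha_bars, rng)
    print(t, round(alpha_bars[t], 4), [round(v, 3) for v in xt])
```

Early steps barely change the sample, while by the last step ᾱ_t is close to zero and x_t is almost pure noise, which is the starting point for generation.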

Projects requiring advanced architectures like transformers or diffusion models often demand specialized skills. Organizations may choose to hire generative AI developers to bring in experience with large-scale model training and deployment.

Evaluate Computational Requirements and Constraints

Generative AI models demand substantial computational resources, so cloud platforms are the practical choice for most teams that do not operate their own GPU clusters. The choice between major cloud providers depends on specific requirements, budget constraints, and existing infrastructure.

GPU and TPU Instance Recommendations:

- AWS EC2 P4d instances: Equipped with NVIDIA A100 GPUs, ideal for large-scale transformer training

- Google Cloud TPU v4: Designed for TensorFlow and JAX workloads (PyTorch is supported through PyTorch/XLA), often with competitive price-performance for large training jobs

- Azure NC series: Balanced options with NVIDIA V100 and A100 GPUs
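Before picking an instance type, a back-of-the-envelope estimate helps. The sketch below assumes two commonly cited rules of thumb: mixed-precision training with the Adam optimizer needs roughly 16 bytes of accelerator memory per parameter for weights, gradients, and optimizer states (activations excluded), and training a dense transformer costs roughly 6 × parameters × training tokens floating-point operations. The model size, token count, and 40% hardware utilization in the example are hypothetical inputs, not measurements.

```python
# Assumed rule of thumb: fp16 weights (2) + fp16 grads (2) + fp32 master weights,
# momentum, and variance (4 + 4 + 4) = 16 bytes per parameter. Activations are extra.
BYTES_PER_PARAM_ADAM_MIXED = 16


def training_memory_gb(num_params: float) -> float:
    """Rough memory for weights, gradients, and Adam states; excludes activations."""
    return num_params * BYTES_PER_PARAM_ADAM_MIXED / 1e9


def training_flops(num_params: float, num_tokens: float) -> float:
    """Approximate dense-transformer training compute: ~6 FLOPs per parameter per token."""
    return 6 * num_params * num_tokens


def gpu_hours(total_flops: float, peak_flops_per_gpu: float, utilization: float = 0.4) -> float:
    """GPU-hours needed if each GPU sustains an assumed fraction of its peak throughput."""
    return total_flops / (peak_flops_per_gpu * utilization) / 3600


params = 1e9             # hypothetical 1B-parameter model
tokens = 20e9            # hypothetical 20B training tokens
a100_bf16_peak = 312e12  # NVIDIA A100 dense BF16 peak, FLOP/s
compute = training_flops(params, tokens)
print(f"memory (excl. activations): {training_memory_gb(params):.0f} GB")
print(f"compute: {compute:.2e} FLOPs")
print(f"A100 GPU-hours at 40% utilization: {gpu_hours(compute, a100_bf16_peak):.0f}")
```

For these inputs the estimate is about 16 GB for model and optimizer state and roughly 267 A100 GPU-hours, or about a day and a half on an 8-GPU P4d node if scaling were perfect. Activation memory, data loading, and checkpointing add overhead on top.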


Build a Data Strategy

High-quality data has a direct impact on output quality, so plan data collection and preparation carefully:

- Gather datasets from repositories (Hugging Face, Kaggle), industry sources, internal data, or web scraping where licenses and applicable law permit it. Ensure completeness, accuracy, and representativeness.

- Clean and normalize text, images, numerical, and categorical data using Pandas, NumPy, OpenCV, or regex pipelines.

- Data augmentation increases dataset diversity: rotate/flip images, paraphrase text, adjust audio pitch or inject noise.

- Split datasets: ~70% training, 15% validation, 15% testing, using stratified or time-aware sampling.

- Versioning and tracking: Use DVC or MLflow to link models to specific data snapshots for reproducibility.
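The cleaning and splitting steps above can be sketched with the Python standard library alone. This example assumes a labeled text dataset held in memory as (text, label) pairs; the normalization rules (Unicode NFKC, whitespace collapsing, exact-duplicate removal) are one reasonable choice rather than a fixed recipe, and the function names are hypothetical.

```python
import random
import re
import unicodedata
from collections import defaultdict


def clean_text(text: str) -> str:
    """Normalize Unicode compatibility forms and collapse runs of whitespace."""
    text = unicodedata.normalize("NFKC", text)
    return re.sub(r"\s+", " ", text).strip()


def deduplicate(records: list[tuple[str, str]]) -> list[tuple[str, str]]:
    """Drop empty texts and exact duplicates (after cleaning), keeping the first occurrence."""
    seen, unique = set(), []
    for text, label in records:
        cleaned = clean_text(text)
        if cleaned and cleaned not in seen:
            seen.add(cleaned)
            unique.append((cleaned, label))
    return unique


def stratified_split(records, train=0.70, val=0.15, seed=42):
    """Split each label group separately so every split keeps the label proportions."""
    by_label = defaultdict(list)
    for record in records:
        by_label[record[1]].append(record)
    rng = random.Random(seed)  # fixed seed makes the split reproducible
    splits = {"train": [], "val": [], "test": []}
    for group in by_label.values():
        rng.shuffle(group)
        n_train = int(len(group) * train)
        n_val = int(len(group) * val)
        splits["train"] += group[:n_train]
        splits["val"] += group[n_train:n_train + n_val]
        splits["test"] += group[n_train + n_val:]  # remainder (~15%) goes to test
    return splits


raw = [("Great  product!", "pos"), ("Great product!", "pos"), ("Terrible\tservice", "neg")]
raw += [(f"review {i}", "pos" if i % 2 else "neg") for i in range(100)]
data = deduplicate(raw)
splits = stratified_split(data)
print({name: len(rows) for name, rows in splits.items()})
```

For time-ordered data, replace the shuffle with a cut-off date so validation and test examples come strictly after the training period. Recording the seed and the data snapshot (for example with DVC) keeps the split reproducible.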

Choose the Right Model Architecture

Select a model based on your task:

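- Transformers for text

- GANs or diffusion models for images

- VAEs for structured outputs

Whatever the model family, design deep networks with residual (skip) connections, which help gradients flow through many layers, and attention mechanisms, which let the model weigh relationships across the whole input.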
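As an illustration of the residual connections and attention mechanisms mentioned above, the sketch below implements single-head scaled dot-product self-attention, softmax(QKᵀ/√d)·V, and wraps it in a residual connection (output = x + attention(x)). It uses NumPy; random projection matrices stand in for learned weights, and it omits the layer normalization, multiple heads, and masking that a production transformer block would include.

```python
import numpy as np


def softmax(scores: np.ndarray) -> np.ndarray:
    """Row-wise softmax; subtracting the row max keeps exp() numerically stable."""
    shifted = scores - scores.max(axis=-1, keepdims=True)
    weights = np.exp(shifted)
    return weights / weights.sum(axis=-1, keepdims=True)


def self_attention(x: np.ndarray, w_q: np.ndarray, w_k: np.ndarray, w_v: np.ndarray):
    """Single-head scaled dot-product attention over a (seq_len, d_model) input."""
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    d_k = k.shape[-1]
    weights = softmax(q @ k.T / np.sqrt(d_k))  # (seq_len, seq_len); each row sums to 1
    return weights @ v, weights


def residual_attention_block(x, w_q, w_k, w_v):
    """Residual (skip) connection: the block adds a learned correction to its input."""
    attended, weights = self_attention(x, w_q, w_k, w_v)
    return x + attended, weights


rng = np.random.default_rng(0)
seq_len, d_model = 4, 8
x = rng.normal(size=(seq_len, d_model))
# Hypothetical random weights standing in for trained projection matrices.
w_q, w_k, w_v = (rng.normal(scale=d_model ** -0.5, size=(d_model, d_model)) for _ in range(3))
out, attn = residual_attention_block(x, w_q, w_k, w_v)
print(out.shape, attn.sum(axis=-1))
```

Because the block returns x plus a correction, an untrained or unhelpful attention layer can stay close to the identity, which is one reason very deep stacks of such blocks remain trainable.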

Test Model Outputs Against Quality Benchmarks

Establishing proper evaluation metrics forms the backbone of successful generative AI model development. Quality benchmarks provide objective measures to assess whether the model produces outputs that meet specific standards and user expectations.

Quantitative Metrics for Text Generation

- BLEU Score: Measures n-gram precision overlap between generated text and one or more reference texts; most widely used for machine translation

- ROUGE Score: Measures recall-oriented n-gram overlap, focusing on how much of the reference content appears in the generated output; widely used for summarization

- Perplexity: The exponentiated average negative log-likelihood per token, indicating how well the model predicts held-out sequences; lower values suggest better performance

- BERTScore: Compares generated and reference text using contextual embeddings from a pretrained model, capturing semantic similarity that exact n-gram matching misses
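Perplexity and ROUGE are simple enough to compute by hand. The sketch below assumes you already have the probability your model assigned to each actual next token (for perplexity) and plain strings split on whitespace (for ROUGE-1, the unigram variant of ROUGE). For reported results, prefer established implementations with standard tokenization over this minimal version.

```python
import math
from collections import Counter


def perplexity(token_probs: list[float]) -> float:
    """exp of the average negative log-likelihood per token; lower is better."""
    nll = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(nll)


def rouge_1(candidate: str, reference: str) -> dict[str, float]:
    """Unigram overlap; each word counts at most as often as it appears in both texts."""
    cand = Counter(candidate.lower().split())
    ref = Counter(reference.lower().split())
    overlap = sum((cand & ref).values())  # Counter & keeps the minimum count per word
    recall = overlap / max(sum(ref.values()), 1)
    precision = overlap / max(sum(cand.values()), 1)
    f1 = 2 * precision * recall / (precision + recall) if overlap else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}


print(perplexity([0.25, 0.25, 0.25, 0.25]))  # uniform over 4 options: perplexity of about 4
print(rouge_1("the cat sat on the mat", "the cat lay on the mat"))
```

A perplexity of 4 means the model is, on average, as uncertain as if it were choosing uniformly among four tokens. In the ROUGE example, five of the six reference words are recovered, so recall, precision, and F1 are all 5/6.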

Image Generation Quality Assessment

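- Inception Score (IS): Uses an Inception classifier's predictions to evaluate both the quality and diversity of generated images; higher is better

- Fréchet Inception Distance (FID): Compares the distributions of real and generated images in Inception feature space; lower is better

- Structural Similarity Index (SSIM): Measures similarity between a generated image and a reference image based on luminance, contrast, and structure; most useful for reconstruction and image-to-image tasks

- Learned Perceptual Image Patch Similarity (LPIPS): Assesses perceptual similarity using deep features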
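FID fits a Gaussian to each set of image features and measures the distance between them: FID = ‖μ_r − μ_g‖² + Tr(Σ_r + Σ_g − 2(Σ_r Σ_g)^½). The NumPy sketch below assumes you have already extracted features for real and generated images (in practice, 2,048-dimensional Inception-v3 pooling activations; random vectors stand in for them here). Instead of a dedicated matrix square root routine, it uses the fact that the eigenvalues of Σ_r Σ_g are real and non-negative for covariance matrices, so the trace of the square root is the sum of their square roots.

```python
import numpy as np


def frechet_distance(real_feats: np.ndarray, gen_feats: np.ndarray) -> float:
    """FID between two (n_samples, n_features) arrays of image features."""
    mu_r, mu_g = real_feats.mean(axis=0), gen_feats.mean(axis=0)
    cov_r = np.cov(real_feats, rowvar=False)
    cov_g = np.cov(gen_feats, rowvar=False)
    # Tr(sqrt(cov_r @ cov_g)) = sum of square roots of its eigenvalues; taking the real
    # part and clipping at zero absorbs floating-point noise.
    eigvals = np.linalg.eigvals(cov_r @ cov_g)
    tr_covmean = np.sqrt(np.clip(eigvals.real, 0, None)).sum()
    diff = mu_r - mu_g
    return float(diff @ diff + np.trace(cov_r) + np.trace(cov_g) - 2 * tr_covmean)


rng = np.random.default_rng(0)
real = rng.normal(size=(500, 16))               # stand-in for features of real images
close = rng.normal(size=(500, 16))              # same underlying distribution
shifted = rng.normal(loc=0.5, size=(500, 16))   # mean shifted by 0.5 in every feature
print(frechet_distance(real, close), frechet_distance(real, shifted))
```

The first distance should be small (only sampling noise), while the second should be noticeably larger, roughly tracking the squared mean shift summed over the 16 features. FID values are only comparable when computed with the same feature extractor and similar sample sizes.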

Creating comprehensive test suites requires collecting diverse datasets that represent real-world scenarios. These datasets should include edge cases, varying complexity levels, and different input types to ensure robust evaluation across multiple dimensions.


Deploy the Model and Monitor Its Performance

Package the model with Docker and orchestrate the containers with Kubernetes for consistent, scalable environments. Expose it through an API built with a framework such as FastAPI or Flask, or through a user interface built with a tool such as Streamlit.

Monitor latency, throughput, and output quality, and watch for data drift. Use human feedback, A/B testing, and logging to drive continuous improvement. Implement update pipelines, version control, and rollback mechanisms so that new model versions can be released safely and reverted quickly if quality drops. Regular retraining keeps models accurate and aligned with evolving business needs.
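One common way to quantify data drift is the Population Stability Index (PSI), which compares how a numeric input feature or model score is distributed in production against a baseline window. The sketch below assumes equal-width bins taken from the baseline's range; the rule of thumb that a PSI above about 0.2 signals significant drift is an industry convention rather than a universal threshold, and the function name is hypothetical.

```python
import math
import random


def psi(baseline: list[float], current: list[float], bins: int = 10, eps: float = 1e-6) -> float:
    """Population Stability Index: sum of (cur - base) * ln(cur / base) over shared bins."""
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # guard against a constant baseline

    def fractions(values: list[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            # Clamp so values outside the baseline range land in the edge bins.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        return [max(c / len(values), eps) for c in counts]  # eps avoids log(0)

    base, cur = fractions(baseline), fractions(current)
    return sum((c - b) * math.log(c / b) for b, c in zip(base, cur))


rng = random.Random(7)
baseline = [rng.gauss(0, 1) for _ in range(5000)]    # stand-in for a monitored feature at launch
stable = [rng.gauss(0, 1) for _ in range(5000)]      # same distribution later on
drifted = [rng.gauss(0.8, 1.3) for _ in range(5000)] # shifted and wider distribution
print(f"stable: {psi(baseline, stable):.3f}, drifted: {psi(baseline, drifted):.3f}")
```

In a monitoring job, a check like this would run on a schedule for key inputs and output scores, raising an alert (or triggering the retraining pipeline) when the index crosses the chosen threshold.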

However, deploying models at scale, ensuring robust monitoring, and setting up retraining pipelines can be complex. Hiring generative AI developers can help implement best practices and accelerate time-to-value.

Conclusion

Successful generative AI development requires attention to each stage: model selection, architecture design, data strategy, training, evaluation, and deployment. High-quality data and careful preprocessing have a direct impact on the quality of the output. Proper architecture, hyperparameter tuning, and transfer learning improve performance, while production-ready optimization ensures scalability and efficiency.

Treat generative AI development as an iterative process: continuous monitoring, retraining, and improvement lead to models that reliably meet business objectives and real-world demands.

FAQs

Are generative AI models expensive to train?

Costs depend on model size, dataset volume, and computational resources. Transformers and diffusion models can be resource-intensive; however, careful planning, cloud optimization, and leveraging pre-trained models can significantly reduce training time and cost.

Can businesses develop generative AI models in-house?

Yes, but building and deploying a generative AI model requires specialized expertise in model architectures, data engineering, and cloud infrastructure. Many organizations hire generative AI developers to accelerate development, ensure quality outputs, and deploy production-ready systems efficiently.

When should I consider hiring generative AI developers?

If your project requires complex architectures, large datasets, cloud-based training, or production-grade deployment, hiring generative AI developers brings specialized skills that save time, reduce errors, and accelerate ROI.

What is the cost of developing a generative AI model?

The cost varies based on complexity, data, and resources. Small prototypes can start around ₹10–30 lakhs (~$12k–$36k), while enterprise-grade models may exceed ₹50 lakhs (~$60k), including cloud, tools, and developer expertise.