Many people assume that AI Agents and LLMs are the same thing, or that an AI Agent is just a “more advanced” version of a model like ChatGPT or Gemini. This article clears up that confusion by explaining how each one works and when to use each.

What is an LLM?

In simple terms, an LLM is a highly capable text generator. Systems like ChatGPT, Gemini, and Claude are built on enormous neural networks trained on massive collections of text and code. At their core, they work by repeatedly predicting a statistically likely next token, whether that’s a word, part of a word, or punctuation.

What makes them impressive is that repeating this prediction process thousands of times allows them to create paragraphs of text, summarize long documents, translate languages, analyze data, and even generate complex code. LLMs can be applied to almost any task that involves understanding or producing language.
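To make the prediction loop concrete, here is a minimal sketch that uses simple word-pair counts instead of a neural network. The corpus and the function names (`build_bigram_counts`, `generate`) are made up for illustration; a real LLM works on a vastly larger scale and with a very different model, but the "predict one token, append it, repeat" loop has the same shape.

```python
from collections import Counter, defaultdict

# Toy corpus (an assumption for this sketch); real models train on far more text.
CORPUS = "the cat sat on the mat . the cat sat on the rug .".split()

def build_bigram_counts(tokens):
    """Count how often each token follows each other token."""
    counts = defaultdict(Counter)
    for current, following in zip(tokens, tokens[1:]):
        counts[current][following] += 1
    return counts

def generate(counts, start, max_tokens=5):
    """Repeatedly append the most frequent next token (greedy decoding)."""
    output = [start]
    for _ in range(max_tokens):
        candidates = counts.get(output[-1])
        if not candidates:
            break  # no known continuation for this token
        next_token, _count = candidates.most_common(1)[0]
        output.append(next_token)
    return output

counts = build_bigram_counts(CORPUS)
print(" ".join(generate(counts, "the")))  # prints "the cat sat on the cat"
```

Each pass through the loop only picks one token. Longer text emerges purely from repeating that step.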

Core Capabilities of Large Language Models

Large language models are powerful cognitive tools because they can adapt to many language-related tasks. They excel at:

- Content Generation: Creating articles, marketing content, or story drafts.

- Summarization and Analysis: Turning long reports, documents, or email threads into concise summaries.

- Question Answering and Knowledge Retrieval: Responding with clear explanations, not just giving links.

- Data Transformation: Converting raw text into structured formats like bullet points, tables, or JSON.

But even with all of these capabilities, LLMs operate inside a closed box. They handle one prompt at a time and then stop. They provide brilliant responses, but only when a user prompts them. They don’t take initiative on their own.

What is an AI Agent?

If an LLM is like a brilliant conversational partner, an AI Agent is more like a proactive assistant who can interpret your goals and complete tasks for you.

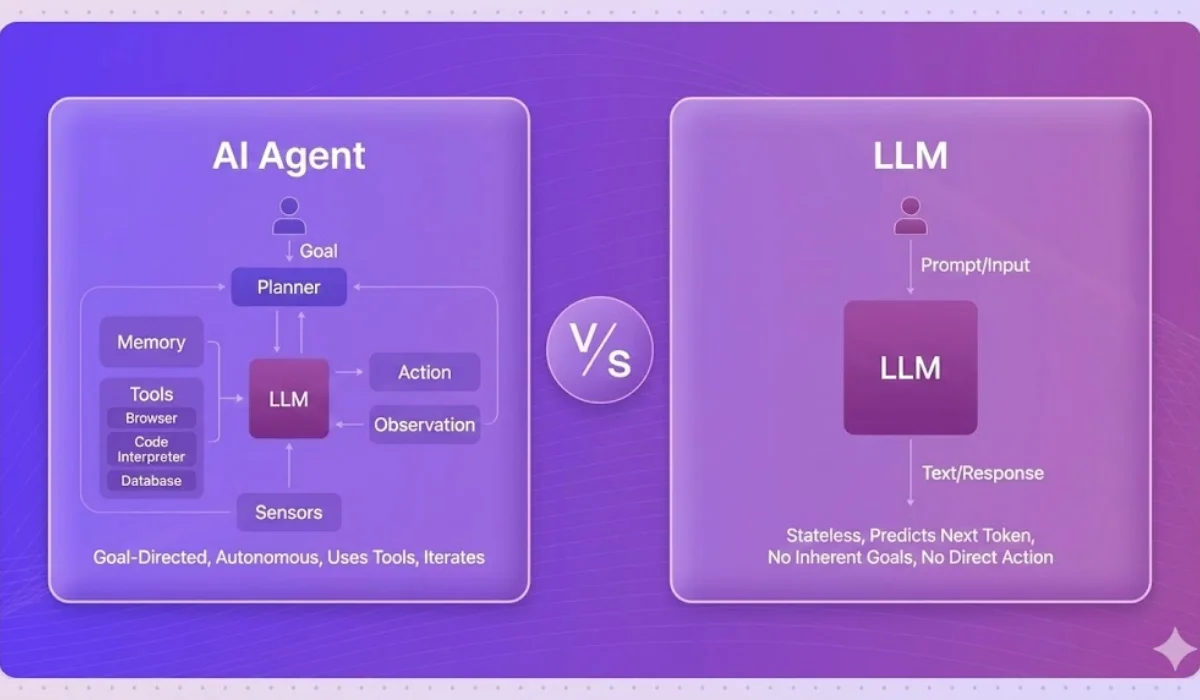

An AI Agent uses an LLM as its “brain,” but adds the ability to interact with the outside world, remember past actions, and complete multi-step processes without you prompting it every time.

Think of an AI Agent as someone who doesn’t just suggest what to do but actually does it. Instead of only drafting an email, they send it. Instead of offering flight options, they book one, update your calendar, and store the receipt.

Key Components of an AI Agent

What separates an AI Agent from a standalone LLM is its full architecture, which gives it autonomy and the ability to act:

- The LLM Core (The Brain): Provides reasoning, planning, and language understanding. It helps the Agent interpret your goal and figure out the steps needed.

- Memory (Context and Learning): Unlike an LLM’s short conversation window, an Agent can store information.

  - Short-Term Memory: Holds details about the current task.

  - Long-Term Memory: Stores preferences, rules, past experiences, and performance, allowing the Agent to improve.

- Planning and Reasoning (The Strategist): Given a broad goal like “Plan my Berlin trip next month,” the Agent breaks it down into steps and works through them one by one.

- Tools and Actions (The Hands): This is the biggest difference between LLMs and AI Agents. An LLM by itself only generates text, whereas an Agent uses tools like APIs, databases, browsers, spreadsheets, and email systems to take real action in the world.
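The four components above can be sketched as a small data structure. This is an illustrative outline under stated assumptions, not how any particular agent framework is built: `stub_llm` is a hypothetical stand-in that returns a canned plan instead of calling a real model, and the two tools are plain functions invented for the example.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

def stub_llm(prompt: str) -> str:
    """Hypothetical stand-in for a model call; returns one 'tool: argument' per line."""
    return "search_flights: Berlin\nbook_hotel: Berlin"

@dataclass
class Agent:
    llm: Callable[[str], str]                             # the brain
    tools: Dict[str, Callable[[str], str]]                # the hands
    short_term: List[str] = field(default_factory=list)   # current-task details
    long_term: Dict[str, str] = field(default_factory=dict)  # kept across tasks

    def plan(self, goal: str) -> List[str]:
        """Ask the LLM core to break the goal into steps (the strategist)."""
        reply = self.llm(f"Break this goal into steps: {goal}")
        return [line.strip() for line in reply.splitlines() if line.strip()]

    def run(self, goal: str) -> List[str]:
        """Work through the plan step by step, calling one tool per step."""
        results = []
        for step in self.plan(goal):
            tool_name, _, argument = step.partition(":")
            result = self.tools[tool_name.strip()](argument.strip())
            self.short_term.append(result)
            results.append(result)
        self.long_term["last_goal"] = goal
        return results

agent = Agent(
    llm=stub_llm,
    tools={
        "search_flights": lambda city: f"found flights to {city}",
        "book_hotel": lambda city: f"booked hotel in {city}",
    },
)
results = agent.run("Plan my Berlin trip next month")
print(results)
```

Swapping `stub_llm` for a real model call would leave the rest of the structure unchanged, which is the sense in which the LLM is only one component of the Agent.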


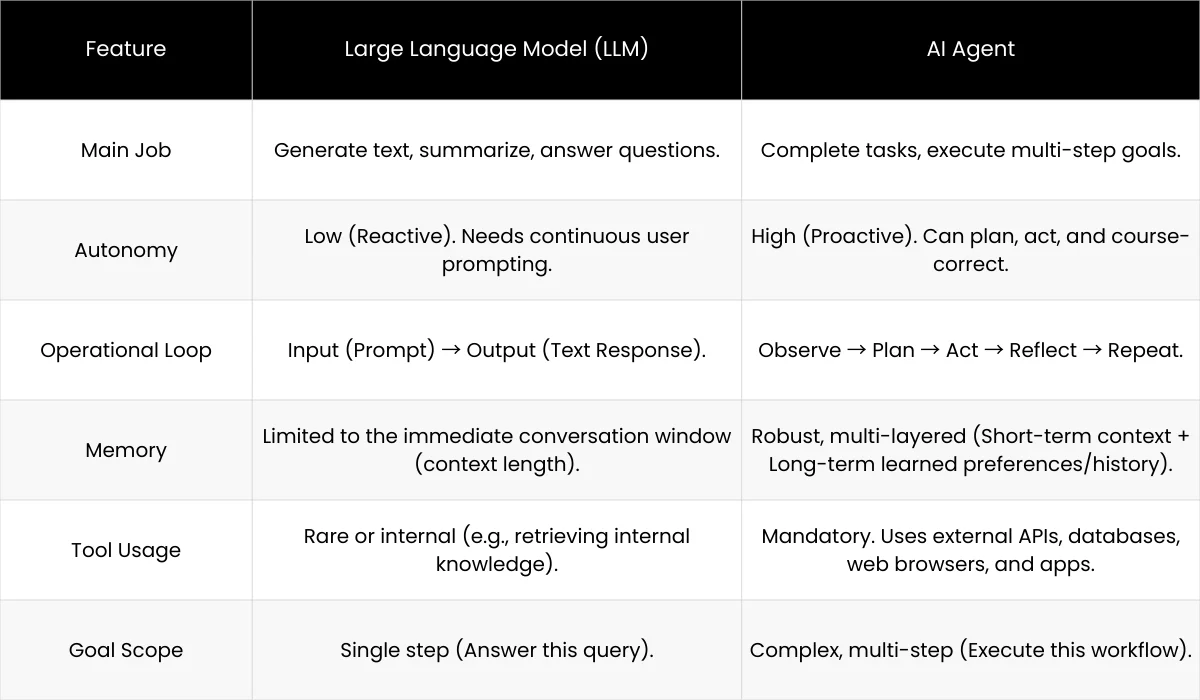

The Key Differences Between LLM and AI Agent

Although an LLM often serves as the core intelligence inside an Agent, the two operate in very different ways. The table and breakdown below show the major differences between a reactive LLM and an autonomous Agent.

1. LLMs work one prompt at a time; Agents work until the goal is done.

LLMs follow a simple pattern: they wait for your input and then give an output. They stop as soon as the response is generated.

Agents run in a loop until the task is complete. They can reflect on mistakes, adjust plans, and take new steps without you stepping back in. If a flight search fails, the Agent can automatically switch APIs and try again. This ability to iterate and self-correct is part of what defines an Agent.
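The flight-search fallback described above might look like the following sketch. Both provider functions are hypothetical: the first always fails to simulate an outage, and the second returns a made-up result. No real flight API is involved.

```python
def primary_flight_api(destination):
    """Hypothetical provider that is currently down."""
    raise ConnectionError("primary API unavailable")

def backup_flight_api(destination):
    """Hypothetical fallback provider returning illustrative data."""
    return [f"Flight to {destination} at 09:00"]

def search_flights(destination, providers):
    """Try each provider in order until one succeeds; record every failure."""
    errors = []
    for attempt, provider in enumerate(providers, start=1):
        try:
            return provider(destination), errors
        except ConnectionError as exc:
            errors.append(f"attempt {attempt}: {exc}")
    raise RuntimeError("all providers failed: " + "; ".join(errors))

flights, errors = search_flights("Berlin", [primary_flight_api, backup_flight_api])
print(flights)  # the backup provider's result
print(errors)   # the recorded failure of the primary provider
```

A single LLM call has no equivalent of this loop: if the response is wrong or incomplete, nothing happens until a person sends another prompt.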

2. LLMs produce text; Agents produce completed tasks.

An LLM can tell you how to do something, or even write code to do it, but it cannot actually execute the code.

An Agent has built-in tool-use abilities. It can issue commands to software systems, click buttons, run scripts, and access third-party apps. This is the difference between knowing how to perform a task and actually performing it.
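One common way to bridge "knowing" and "doing" is to have the model emit a structured description of the action it wants, which the surrounding program then executes. The sketch below assumes a simple JSON shape with `tool` and `arguments` keys; that shape, the `TOOLS` registry, and `add_numbers` are illustrative choices, not a specific vendor's format.

```python
import json

def add_numbers(a, b):
    """Example tool; any ordinary function could be registered here."""
    return a + b

# Registry of actions the agent runtime is allowed to execute.
TOOLS = {"add_numbers": add_numbers}

def execute_tool_call(model_output):
    """Parse the model's text output and run the tool it names."""
    call = json.loads(model_output)
    tool_name = call["tool"]
    if tool_name not in TOOLS:
        raise ValueError(f"unknown tool: {tool_name}")
    return TOOLS[tool_name](**call["arguments"])

# On its own, this string is just text. Nothing runs until the runtime acts on it.
model_output = '{"tool": "add_numbers", "arguments": {"a": 2, "b": 3}}'
print(execute_tool_call(model_output))  # prints 5
```

Restricting execution to a registry of known tools also limits what a malformed or unexpected model output can trigger.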

3. LLMs remember only what fits in a finite window; Agents remember across time.

LLMs have limited context windows and can “forget” earlier parts of a conversation once it gets too long.

Agents, however, are designed with persistent memory. They remember preferences, business rules, and past outcomes. This allows them to act consistently and behave more like reliable coworkers who understand your workflow.
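The contrast between a finite window and persistent memory can be sketched as follows. The word-count "token" estimate and the JSON file store are simplifying assumptions for illustration; real systems use proper tokenizers and often a database or vector store.

```python
import json
import tempfile
from pathlib import Path

def fit_to_window(messages, max_tokens):
    """Keep only the most recent messages that fit; older ones are dropped."""
    kept, used = [], 0
    for message in reversed(messages):
        cost = len(message.split())  # crude token estimate (assumption)
        if used + cost > max_tokens:
            break
        kept.append(message)
        used += cost
    return list(reversed(kept))

class PersistentMemory:
    """Key-value memory saved to disk so it survives across sessions."""

    def __init__(self, path):
        self.path = Path(path)
        self.data = json.loads(self.path.read_text()) if self.path.exists() else {}

    def remember(self, key, value):
        self.data[key] = value
        self.path.write_text(json.dumps(self.data))

    def recall(self, key, default=None):
        return self.data.get(key, default)

# The oldest message no longer fits and is "forgotten".
window = fit_to_window(["a b c", "d e", "f"], max_tokens=3)

with tempfile.TemporaryDirectory() as folder:
    store_path = Path(folder) / "memory.json"
    PersistentMemory(store_path).remember("seat_preference", "aisle")
    # A fresh instance, as in a later session, still sees the stored value.
    recalled = PersistentMemory(store_path).recall("seat_preference")

print(window, recalled)
```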


Example of LLM and AI Agent

A company might use an LLM-powered FAQ chatbot to answer internal questions. For example, an employee may ask, “What’s our remote work stipend policy?” The chatbot finds the relevant information, summarizes the policy, and provides a helpful explanation. The task ends there: the answer is useful, fast, and accurate, but ultimately limited to delivering information.

Now consider the same company using an AI Agent. When a customer refund request comes in, say, for order #12345, the Agent doesn’t just explain the refund process. It carries it out. It checks the order details, verifies the return, issues the refund through the finance system, sends a confirmation email to the customer, and updates the CRM with the completed action. The entire workflow is completed automatically.
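The refund workflow can be sketched as a sequence of checks and actions. Everything here is a hypothetical stand-in: the `Order` fields, the in-memory `orders` dictionary, and the `log` list take the place of a real order database, finance system, email service, and CRM.

```python
from dataclasses import dataclass, field
from typing import Dict, List

@dataclass
class Order:
    order_id: str
    amount: float
    returned: bool
    customer_email: str

@dataclass
class RefundAgent:
    orders: Dict[str, Order]
    log: List[str] = field(default_factory=list)  # records each action taken

    def handle_refund(self, order_id: str) -> bool:
        order = self.orders.get(order_id)            # 1. check the order details
        if order is None:
            self.log.append(f"order {order_id} not found")
            return False
        if not order.returned:                       # 2. verify the return
            self.log.append(f"order {order_id} has not been returned")
            return False
        self.log.append(f"refund issued: {order.amount:.2f}")     # 3. finance
        self.log.append(f"email sent to {order.customer_email}")  # 4. confirmation
        self.log.append(f"CRM updated for order {order_id}")      # 5. CRM
        return True

refund_agent = RefundAgent(
    orders={"12345": Order("12345", 49.99, True, "customer@example.com")}
)
completed = refund_agent.handle_refund("12345")
print(completed, refund_agent.log)
```

In a production Agent, the LLM would decide which of these steps to take and in what order; here the sequence is hard-coded to keep the example short.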

Conclusion

If you take only one idea away from this comparison of AI Agents and LLMs, let it be this simple principle: The LLM is the thinker; the AI Agent is the doer.

The Large Language Model is reactive intelligence, brilliant at interpreting language and generating content, but reliant on the human prompt for every single step.

The AI Agent, by contrast, is proactive and autonomous. It uses the LLM’s intelligence to reason, plan, use tools, and execute complex, multi-step goals without continuous human oversight.

Knowing the difference between the two has practical consequences. For individuals, it means the difference between using AI to write a task list and using AI to complete the tasks on the list. For businesses, it means transitioning from using AI for creative assistance to deploying AI for end-to-end automation of critical, high-value workflows.

To stay competitive, businesses and individuals must recognize that the real value of the next generation of AI lies in these autonomous, action-oriented Agents.

FAQs

Are AI agents just LLMs?

No. AI Agents are built on top of LLMs, but they are much more than just a language model. LLMs generate text, answer questions, and provide information. AI Agents use that intelligence to take action, plan multi-step tasks, interact with external systems, and complete workflows automatically. Think of LLMs as the “brain” and Agents as the “brain plus hands” that can execute tasks in the real world.

Is there a difference between LLM and AI?

Yes. An LLM is a specific type of AI focused on understanding and generating human language. LLMs are reactive, meaning they only respond when prompted. AI, as a broader term, includes LLMs but also encompasses systems that can perceive, reason, plan, and act, like AI Agents, computer vision models, robotics, and other intelligent systems. In short, all LLMs are AI, but not all AI is an LLM.

What is the difference between LLM, AI Agent, and RAG?

- LLM (Large Language Model): Generates text and answers questions based on patterns it has learned. It cannot act on the world by itself.

- AI Agent: Uses an LLM as its reasoning core, but also plans, takes actions, uses tools, and completes multi-step workflows. It’s autonomous and proactive.

- RAG (Retrieval-Augmented Generation): Combines an LLM with a retrieval system. When asked a question, it searches a database or knowledge source, retrieves relevant information, and feeds it into the LLM to generate accurate, context-aware answers. Unlike an AI Agent, RAG does not automatically act in the real world; it enhances LLM output with information.
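The retrieval step in RAG can be sketched with a toy word-overlap ranking. This is a deliberately simplified assumption: production systems typically use embeddings and a vector index, and the two documents below are invented placeholders. The key point is that the output is a prompt for the LLM, not an action in the world.

```python
# Placeholder knowledge base (invented for this sketch).
DOCUMENTS = [
    "Remote work stipend is paid monthly to eligible employees.",
    "Refunds are issued within five business days.",
]

def retrieve(question, documents, top_k=1):
    """Rank documents by how many words they share with the question."""
    question_words = set(question.lower().split())

    def overlap(document):
        return len(question_words & set(document.lower().split()))

    return sorted(documents, key=overlap, reverse=True)[:top_k]

def build_prompt(question, documents):
    """Combine retrieved context and the question into one LLM prompt."""
    context = "\n".join(retrieve(question, documents))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"

question = "What is the remote work stipend policy?"
prompt = build_prompt(question, DOCUMENTS)
print(prompt)
```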