The newest generation of AI, Generative AI (GenAI), can write code, create images, and draft full reports. It’s a game-changer for businesses, boosting productivity and transforming customer service.

But this incredible power comes with big risks, mostly related to data. When these models use and create massive amounts of information, the old rules of data governance stop working.

For any company using Large Language Models (LLMs), strong AI data governance isn’t just a legal hoop to jump through; it’s the only way to innovate without fear. This guide will walk you through how governance has changed, explain the key risks, and give you a simple, 5-step plan to secure your AI processes.

How is Data Governance Different When You Use Generative AI?

Traditional data governance was easy: it focused on managing static, organized data, like records in a database. It was about defining who owned that data and how to access it. Generative AI complicates everything by adding dynamic data flows and complex systems that are hard to see inside.

Governance now focuses on four key areas:

- Training Data (The Source): This is the huge collection of data used to build the AI model. Governance must track where this data came from, who licensed it, and check for bias or copyright issues before the model is used.

- Prompt Data (The Input): This is the information users type into the AI. The rule here is simple: stop people from feeding highly sensitive, confidential, or private customer data (PII) into the prompt box, especially when using third-party AI tools where your data might be saved or used for future training.

- Output Data (The Generation): This is the new content the AI creates. It’s often synthetic, but it can accidentally expose training data or simply “hallucinate”, creating false but believable information. Governance needs rules to check, label, and classify this new data.

- Model Lifecycle (The System): The model itself is now part of governance. This means keeping track of model versions, monitoring its performance over time (making sure it doesn’t get “dumber”), and keeping clear records of every single AI interaction.

The core difference is moving from managing data that sits still to managing data that moves and data that is created.

Why is Data Governance Important in the Era of Generative AI?

The need for strong data governance in generative AI is urgent because it directly impacts your business stability and legal safety. For instance, if your AI tools are unreliable, your business suffers immediately, here’s how:

- If the AI often spits out copyrighted content, biased text, or false facts, people will stop trusting the tool. This pushes them toward unsafe, unmanaged “shadow AI” use, or they just stop using AI altogether.

- If you don’t control the quality of the data used to train the AI or the data people put in, you get “garbage in, garbage out.” Unreliable results cancel out any productivity gains.

Without clear governance rules, employees have to manually check everything the AI produces. This added friction eliminates the speed and efficiency that GenAI is supposed to provide.

Also Read: How Top Companies Are Integrating Generative AI in Their Business

Risks of Generative AI without Data Governance

- Privacy Risk

However, GenAI solutions can also possess risks unlike traditional software such as:

Privacy Exposure (Data Leakage): If employees use company secrets or customer PII in their prompts, that sensitive information could be absorbed by the model and possibly exposed to another user later. This is a critical security hole.

Hallucination and Misinformation: AI models sometimes make things up with high confidence. If these “AI lies” are used in critical reports or public statements, it creates a massive reputation and liability risk.

Copyright and IP Protection: It’s hard to prove where generated content originated. If your AI output accidentally copies copyrighted material, or if your proprietary IP is used by a third-party model, you face significant legal battles.

- Compliance Risk

Besides these risks, there’s a greater risk with generative AI as governments around the world are quickly writing rules for AI:

The EU AI Act: This law forces companies using “high-risk” AI systems to meet strict standards for safety, transparency, and data quality.

NIST AI Risk Management Framework (RMF): This is a helpful guideline from the US government on how to identify, assess, and manage all your AI-related risks, with a heavy focus on governance.

Industry Standards: Rules in healthcare (like HIPAA) or finance (like PCI DSS) now require specific controls for how sensitive data interacts with AI. Good governance is your proof of compliance.

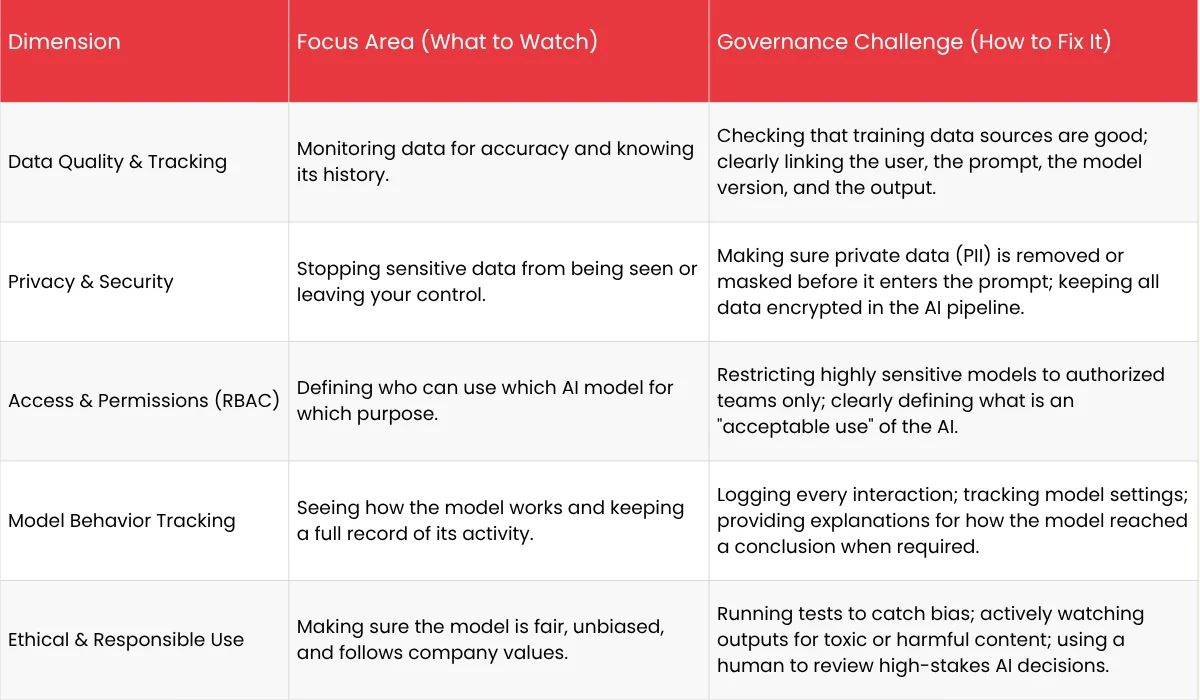

The Key Areas for Applying Data Governance in Generative AI

Effective GenAI data governance requires teamwork across security, legal, and data science teams. These five areas are where you need to focus your effort.

5-Step Framework for AI Data Governance

You need a clear, systematic approach to manage AI risk. This 5-step framework for AI data governance moves from basic classification to constantly checking your systems, ensuring your guardrails are solid and current.

Stage 1: Classify and Prioritize Data

You can’t protect data if you don’t know what it is. So, start with data classification and prioritization. Here’s how you can do it.

Map Sensitive Data: Identify and label all data interacting with your AI based on how sensitive it is (e.g., Public, Internal, Confidential, Restricted).

Set Clear Rules: Create strict rules for each category. Example: “Private customer data must never be used in a public AI prompt,” or “Company financials can only be used with our internal, private AI model.”

Automated Redaction: Put tools in place to automatically find and hide sensitive information from a user’s prompt before the AI sees it.

Stage 2: Define Policy and Usage Controls

Turn your classification rules into technical roadblocks.

- Prompt Filters: Use rules to scan and block prompts that contain keywords, sensitive patterns (like credit card numbers), or language that violates your company policy.

- Output Scanners: Deploy mechanisms that check the model’s output for leaks of intellectual property or inappropriate content (e.g., hate speech) before it reaches the user.

- Role-Based Access Control (RBAC): Only allow specific, verified people to use sensitive models. For example, the HR team might be the only group authorized to use a model fine-tuned for salary planning.

Stage 3: Secure the Entire AI Process

Your security architecture must cover the whole data flow, end-to-end.

Data Encryption: Make sure all data is encrypted when it’s being sent (in transit) and when it’s being stored (at rest) within the AI environment.

Isolation and Sandboxing: Keep your confidential models and data separate from general systems using protected cloud environments or internal ‘sandboxes.’

Vulnerability Management: Regularly check and patch the infrastructure—including model hosting and API gateways, for security weaknesses.

Stage 4: Monitor and Validate Model Behavior

Governance requires constantly checking that the AI is performing well and acting responsibly.

- Bias and Fairness Testing: Run systematic tests to ensure the model isn’t making unfair decisions based on user characteristics like gender or ethnicity.

- Drift Monitoring: Keep an eye on model drift, how the model’s performance slowly worsens or changes its outputs over time, and set alerts for when it needs to be fixed or retrained.

- Tracking History: Automatically record key details for every AI interaction: the user, the time, the exact prompt, the model version used, and the final output. This gives you complete data lineage.

- Hallucination Rate: Measure how often the model gets facts wrong in known test cases. This helps manage the risk threshold for using the model.

Stage 5: Audit and Continuously Improve

The last stage is about being accountable and ready to adapt.

Complete Logging and Reporting: Maintain clear, unchangeable logs of all governance actions, like when a prompt was blocked or an output was flagged. Generate compliance reports regularly.

Compliance Verification: Periodically test your policies against global rules (like GDPR) to ensure they still meet regulatory requirements.

Feedback Loop: Create a simple way for users to report when the AI gives a wrong, biased, or harmful answer. This user feedback is vital for making immediate policy updates and improving the model over time.

How Do Standard Frameworks (Like NIST and the EU AI Act) Make AI Safer?

Using formal AI governance frameworks gives you a standard rulebook to follow. By using guides like NIST or the EU AI Act, your company can move from simply reacting to problems to proactively managing risk.

These external guides help you connect regulations to practical action:

- Security and Transparency: Frameworks force you to document every part of your system, data sources, model design, and deployment. This documentation directly helps fight data leakage by creating a traceable data flow that can be checked (audited).

- Accountability: Frameworks require you to define clear roles, such as a Model Owner or an AI Risk Officer. This ensures that if the model causes harm (like a security breach or biased output), someone is clearly responsible.

- IP Protection: These frameworks guide you toward technical steps like only running your proprietary models inside a secure private network (VPC) to protect your intellectual property from external exposure.

Practical Steps to Reduce AI Risk

Pre-Launch Testing (Red-Teaming): Before a new AI model goes live, governance requires “red-teaming.” This means a specialized group tries to trick the model into producing sensitive or harmful outputs. This helps you find and fix vulnerabilities before deployment.

Mandatory Checkpoints: Frameworks enforce “stage-gates” where the model must pass checks for data quality, security, and fairness before moving from testing to production.

Input/Output Cleaning: They require layers of security that act as “airlocks,” checking every prompt for confidential content and checking every output for policy violations before the interaction is completed.

What are the Most Common Mistakes in AI Data Governance?

Many organizations struggle with AI data governance. Knowing these common errors can help you build a stronger, more lasting program.

- Treating Governance as an Afterthought: The biggest mistake is trying to add governance at the end of a project. Governance must be built in from day one, using a “security-by-design” approach. Trying to force rules onto a completed system is expensive and creates huge gaps in compliance.

- Blindly Trusting Vendors: Just because a third-party model provider promises compliance doesn’t mean your work is done. Your organization is still legally responsible for how your employees use the tool and what sensitive data they input. You must set your own specific prompt controls.

- Lack of Tracking: Not logging the exact prompt and output creates a complete gap in your security record. Without a clear trail, you can’t track down the source of a data leak or monitor how the model is changing, severely limiting your data lineage capability.

- Inconsistent Rules: Allowing different departments (like Marketing and Engineering) to use different rules for the same AI model leads to confusion and risk. Governance requires a single, unified policy that is enforced everywhere.

- Ignoring Model Drift: Since AI models are constantly interacting with new data, their performance can degrade over time (model drift). If you ignore this, the governance rules you wrote based on the model’s initial behavior will quickly become useless, leading to unreliable and riskier outputs.

Conclusion

Generative AI unlocks huge opportunities, but it significantly increases your data risk. Data Governance for Generative AI is not a roadblock; it is the safety net that allows you to move forward quickly and securely.

By shifting your governance strategy from static rules to dynamic pipeline oversight, and by following a simple, structured blueprint, you can build strong, adaptable guardrails. Data governance is the fundamental key to navigating the GenAI era and driving true innovation with confidence.

FAQs

-

Does Softude offer AI-driven data governance consulting?

Softude does provide consulting that touches the core of AI-driven data governance. Their teams help organizations assess data readiness, establish compliant AI usage practices, and implement security, privacy, and transparency controls across AI workflows.

-

How to implement AI data governance frameworks in a mid-sized company?

Implementation should be iterative, starting with setting clear objectives and defining the project’s scope. It requires establishing foundational data governance policies first, clearly assigning accountability roles across key departments (like Legal, IT, and Business), and then scaling the framework gradually.

-

What are the best AI data governance platforms for enterprise use?

Top enterprise platforms that leverage AI to automate data governance tasks such as classification, quality monitoring, and lineage tracking are Collibra, Informatica Axon, Atlan, and Alation.